How to do Inpainting with Stable Diffusion API?


Stable Diffusion API is a service that provides image generation through API endpoints. It lets users quickly create high-quality images from text inputs, and it offers a selection of models to generate them with, which makes it suitable for a range of applications, including the development of visual content.

The API's integration of Stable Diffusion technology helps to guarantee that the images produced are accurate and consistent, in addition to being aesthetically pleasing. For companies and people who want to rapidly and easily generate compelling visual material, the API is the perfect solution.

In this tutorial, we will discuss the Inpainting endpoint offered by Stable Diffusion API. We will see how to generate images using the Inpainting API and discuss the various parameters that this endpoint offers for image customization. Before that, let's see how to set up an account with Stable Diffusion API and get the API key.

Getting started with the Inpainting API

Setting up an Account

To get the API key, you first need to create an account by signing up on the official website. Once you are signed in, you can see your API key on the dashboard. Your dashboard will look like the one below:

To view the API key, click the ‘View’ option on the dashboard. Once you have the key, you can start making API calls to generate images from different programming languages. We will see how to make API calls in Python using the requests module.
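Since the key is sent with every request, it can be convenient to keep it out of the source code. The following is a minimal sketch, assuming the key has been stored in an environment variable. The variable name STABLE_DIFFUSION_API_KEY and the helper load_api_key are hypothetical names chosen for illustration; the API itself does not require them.

```python
import os

# Hypothetical variable name; any name works as long as it matches your shell setup.
API_KEY_ENV_VAR = "STABLE_DIFFUSION_API_KEY"


def load_api_key(env_var: str = API_KEY_ENV_VAR) -> str:
    """Return the API key stored in the given environment variable."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to the key shown on your dashboard."
        )
    return key
```

The value returned by load_api_key() can then be used as the "key" entry of the request payload instead of a hard-coded string.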

Making API calls

We can interact with the API endpoint using the requests module or the http.client module. Below is the code using the requests module.

import requests

url = "https://stablediffusionapi.com/api/v3/inpaint"

payload = {"key": "Your API key", "prompt": "a cat sitting on a bench", "init_image": "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png", "mask_image": "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png", "width": "512", "height": "512", "samples": "1", "steps": "20", "safety_checker": "no", "enhance_prompt": "yes","guidance_scale": 7.5,"strength": 0.7}

headers = {}

response = requests.request("POST", url, headers=headers, data=payload)

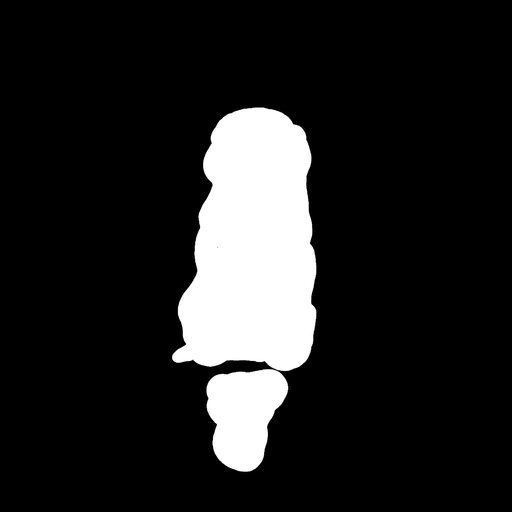

print(response.text)We first imported the requests module. Then we gave the API endpoint URL and created a payload variable to use the parameters for generating the Image. For Inpainting, we need two images. The first one is the base image or ‘init_image’ which is going to get edited. The second one is the mask image which has some parts of the base image removed. In the output image, the masked part gets filled with the prompt-based image in the base image.

The mask image for the above image looks like the one below:

The final output of the code produces a link to the image. The final image of the above generation looks like the one below:

We can see that the dog in the masked region is replaced with a cat, as described by the prompt. This is how Inpainting works with Stable Diffusion API. We can also interact with this API using the http.client module, or from several other languages such as C and C++.
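For readers who prefer the standard library, the same call can be sketched with http.client. This is an illustration under two assumptions that the text above does not confirm: that the endpoint accepts a JSON request body (the requests example sends form data), and that the response is JSON with the image links in a list under an "output" key. The helper names build_inpaint_request, extract_image_links, and inpaint are hypothetical.

```python
import http.client
import json

API_HOST = "stablediffusionapi.com"
API_PATH = "/api/v3/inpaint"


def build_inpaint_request(payload: dict) -> tuple[str, dict]:
    """Serialize the payload and return (body, headers) for the POST request."""
    # Assumption: the endpoint accepts a JSON body.
    body = json.dumps(payload)
    headers = {"Content-Type": "application/json"}
    return body, headers


def extract_image_links(response_text: str) -> list[str]:
    """Pull image links out of the JSON response text.

    Assumption: the links sit in a list under an "output" key. Adjust this
    if the response you receive is shaped differently.
    """
    data = json.loads(response_text)
    output = data.get("output")
    if not isinstance(output, list):
        return []
    return [link for link in output if isinstance(link, str)]


def inpaint(payload: dict, timeout: float = 60.0) -> list[str]:
    """Send the inpainting request over HTTPS and return the image links."""
    body, headers = build_inpaint_request(payload)
    conn = http.client.HTTPSConnection(API_HOST, timeout=timeout)
    try:
        conn.request("POST", API_PATH, body=body, headers=headers)
        response = conn.getresponse()
        text = response.read().decode("utf-8")
    finally:
        conn.close()
    return extract_image_links(text)
```

Calling inpaint(payload) with a payload like the one in the requests example would return the list of links found in the response, or an empty list if the response does not match the assumed shape.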

Parameters for the Inpainting Endpoint

The following parameters are available for the Inpainting endpoint in the Stable Diffusion API. We can customize the image generation using these parameters:

- "key": Your API key.

- "prompt": A sentence describing the image to generate.

- "negative_prompt": A sentence describing the things you don’t want in the image.

- "init_image": The base image from which the new image is generated.

- "mask_image": The mask of the base image.

- "width": Width of the output image. Maximum size is 1024x768 or 768x1024.

- "height": Height of the output image. Maximum size is 1024x768 or 768x1024.

- "samples": The number of images to be generated.

- "steps": The number of denoising steps (minimum: 1, maximum: 50).

- "guidance_scale": Scale for classifier-free guidance (minimum: 1, maximum: 20).

- "safety_checker": A filter for NSFW content.

- "enhance_prompt": Enhances the prompt for better results.

- "strength": This corresponds to the prompt strength.

- "seed": A random seed used to generate the image.

- "webhook": A webhook to receive the generated image.

- "track_id": A tracking id to track your API call.

Advantages of the Inpainting endpoint of Stable Diffusion API

The main advantages of using the inpaint endpoint are as follows:

- Versatility: The inpainting endpoint can be used for a wide range of applications, including restoring damaged photos, removing unwanted objects from images, and more. This versatility makes it an ideal solution for organizations that need to generate high-quality images for a variety of purposes.

- Time-saving: With the inpainting endpoint, customers can save time and resources compared to manual image editing processes. It can handle high volumes of requests, providing results quickly and efficiently.

- Customizability: With the inpainting endpoint, users can specify the exact area to be inpainted and adjust parameters such as the level of detail and style, resulting in highly customized images that meet their specific requirements.
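To make the customization options concrete, here is a minimal sketch of a helper that assembles a request payload and checks the limits listed in the parameters section (steps from 1 to 50, guidance_scale from 1 to 20, and a maximum size of 1024x768 or 768x1024). The function name build_inpaint_payload is hypothetical, the defaults mirror the example request earlier in this tutorial, and the size check is one reading of the stated maximum.

```python
def build_inpaint_payload(
    key,
    prompt,
    init_image,
    mask_image,
    *,
    negative_prompt=None,
    width=512,
    height=512,
    samples=1,
    steps=20,
    guidance_scale=7.5,
    strength=0.7,
    safety_checker="no",
    enhance_prompt="yes",
    seed=None,
):
    """Build the inpainting payload after checking the documented limits."""
    if not 1 <= steps <= 50:
        raise ValueError("steps must be between 1 and 50")
    if not 1 <= guidance_scale <= 20:
        raise ValueError("guidance_scale must be between 1 and 20")

    # Assumed reading of the size limit: the image must fit inside
    # 1024x768 (landscape) or 768x1024 (portrait).
    fits_landscape = width <= 1024 and height <= 768
    fits_portrait = width <= 768 and height <= 1024
    if width < 1 or height < 1 or not (fits_landscape or fits_portrait):
        raise ValueError("size must fit within 1024x768 or 768x1024")

    payload = {
        "key": key,
        "prompt": prompt,
        "init_image": init_image,
        "mask_image": mask_image,
        "width": width,
        "height": height,
        "samples": samples,
        "steps": steps,
        "guidance_scale": guidance_scale,
        "strength": strength,
        "safety_checker": safety_checker,
        "enhance_prompt": enhance_prompt,
    }
    # Optional parameters are only included when they are set.
    if negative_prompt is not None:
        payload["negative_prompt"] = negative_prompt
    if seed is not None:
        payload["seed"] = seed
    return payload
```

Validating locally like this catches out-of-range values before a request is spent on them; the returned dictionary can be passed to the request code shown earlier.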

Conclusion

The inpainting endpoint offered by Stable Diffusion API is a powerful tool for generating high-quality images quickly and easily. With its time-saving features and customizability options, the inpainting endpoint is an ideal solution for organizations looking to streamline their image generation processes.

If you're interested in learning more about the inpainting endpoint and how it can benefit your organization, be sure to check out Stable Diffusion API. And don't hesitate to reach out to us for more information or to get started. With the inpainting endpoint, you can take your image generation capabilities to the next level!