Choosing the Best AI Models for Your Business in 2024


AI models are advancing in every industry, but many leaders are unsure what each LLM can do and how much it will cost to use and customize. As a result, some businesses pick an AI model more or less at random, without much thought.

Starting with a popular one can be a good first step. But LLMs are becoming so important for staying competitive that you shouldn’t depend solely on choices made by others.

It’s essential to explore other options and understand that no single LLM will solve every problem. Be ready to look beyond your usual suppliers to find the best solution for your needs.

Recently, Artificial Analysis AI evaluated over 50 AI models and more than 15 API providers, creating various comparison graphs. In this blog, we’ll break down these graphs and provide you with a clear overview of the top AI models to help you choose the best one for your needs.

Comparative Analysis of AI Models

When evaluating AI models, it's important to understand their performance across several critical metrics, including quality, output speed, latency, price, and context window size. Here’s an in-depth comparison of the top models based on these metrics.

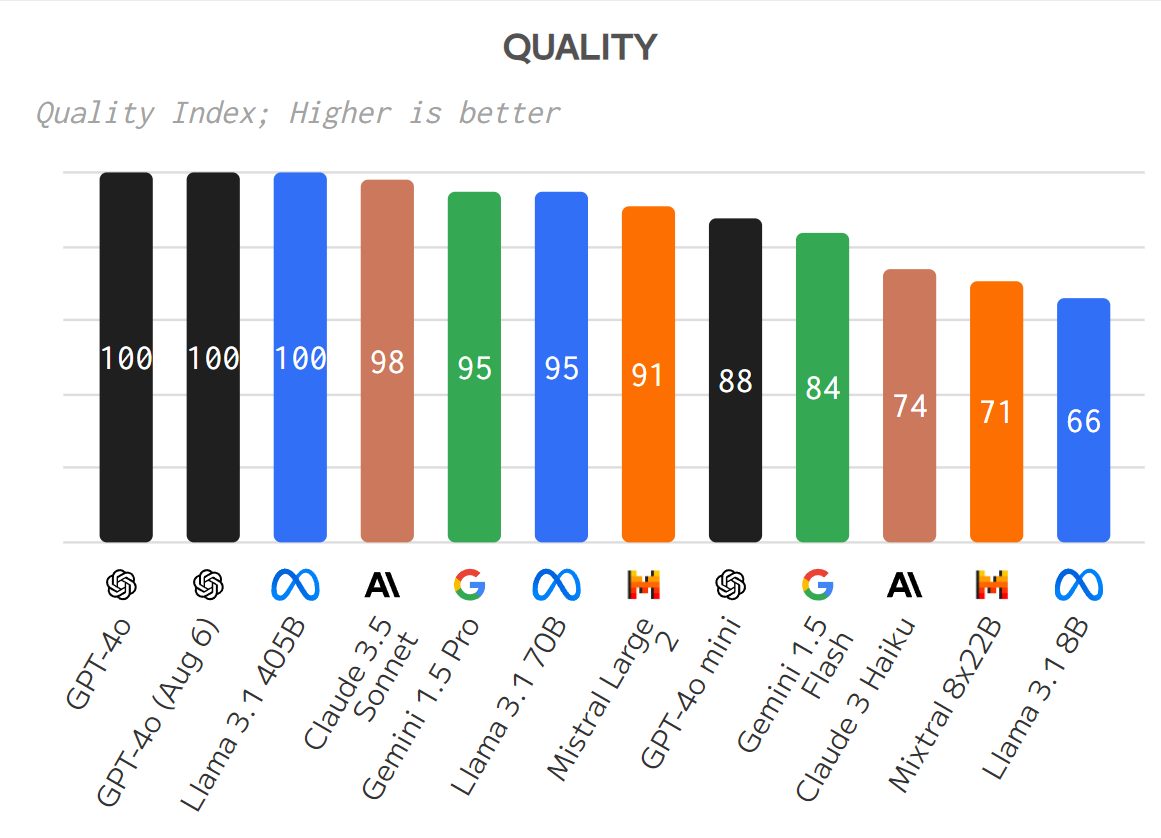

Quality

The quality of a model is a measure of its overall performance and effectiveness. It includes aspects such as accuracy (correctness of results), generalization (ability to handle new data), consistency (reliability across tasks), speed (how quickly it generates results), robustness (performance with noisy or incomplete data), and relevance (how well it meets user needs).

Quality is assessed through benchmarks, user feedback, and performance metrics, often compared with other models to gauge its relative effectiveness.

GPT-4o, GPT-4o (Aug 6) & Llama 3.1 405B: These models are recognized for their exceptional quality across general reasoning and specific benchmarks like Chatbot Arena and MMLU. They consistently achieve the highest scores, reflecting their advanced capabilities in understanding and generating text.

Claude 3.5 Sonnet: It also demonstrates high-quality outputs, though slightly below the GPT-4o models. It is known for strong reasoning and knowledge abilities but may not match the latest GPT models in all aspects.

Gemini 1.5 Pro & Llama 3.1 70B: These models provide robust performance but are less advanced than the top-tier models. They are effective for many use cases but might fall short in very complex scenarios.

Here is a comparison of AI models based on different abilities:

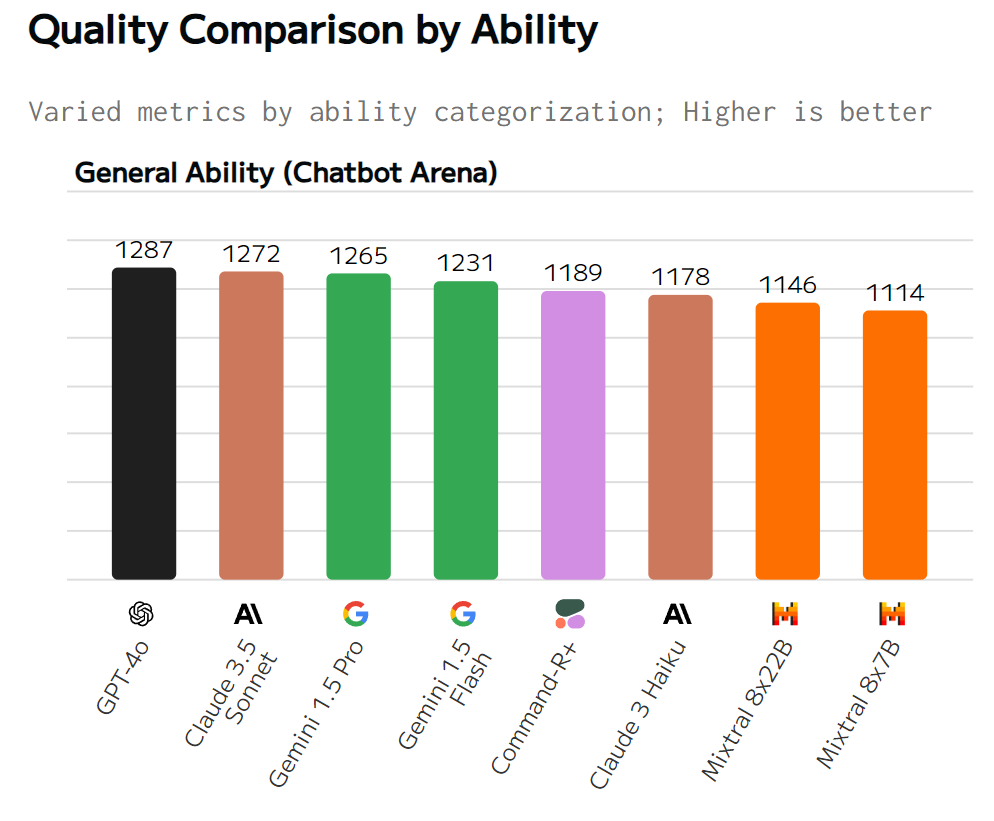

General Ability (Chatbot Arena)

General Ability (Chatbot Arena) measures a model's overall performance across diverse tasks, such as answering questions and carrying on conversations. It reflects how well a model handles different challenges in a competitive environment, with higher scores indicating greater versatility and effectiveness. Here are the top models based on general ability:

GPT-4o: This model is known for its excellent understanding and text generation. It handles a wide range of tasks very well, making it the top choice for both general and specific uses.

Claude 3.5 Sonnet: Claude 3.5 Sonnet is strong in reasoning and knowledge. It performs well in many situations, closely following GPT-4o, though it's a bit less versatile overall.

Gemini 1.5 Pro: Gemini 1.5 Pro is reliable and handles complex tasks effectively. It's a solid choice for most needs, even if it’s not quite as powerful as the top models.

Gemini 1.5 Flash: This model is consistent and dependable. While it might not be the best for very challenging tasks, it’s great for everyday use, which is why it ranks highly.

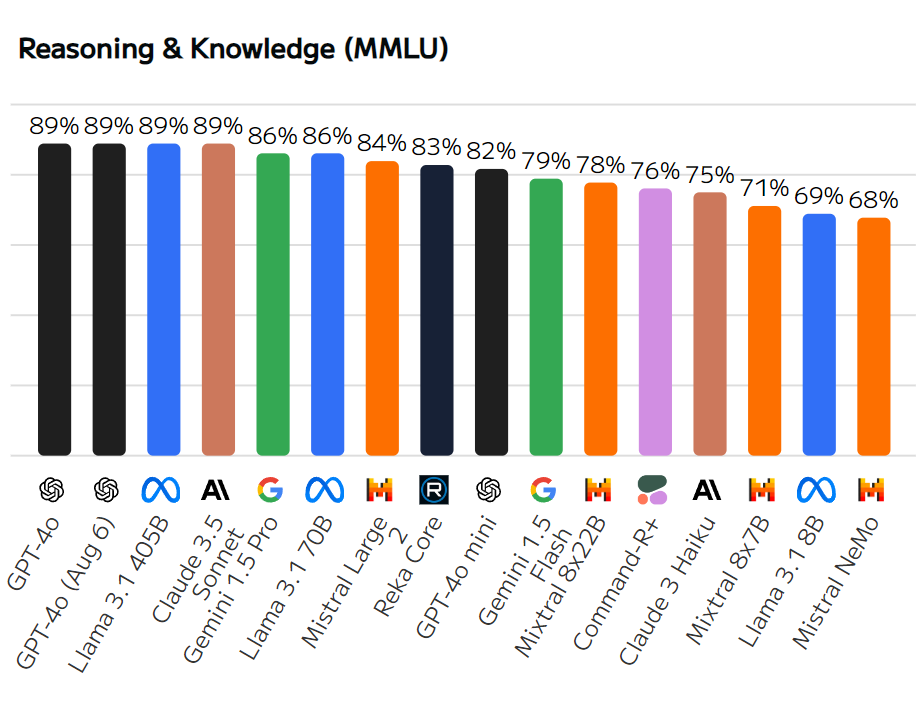

Reasoning and Knowledge

Models are evaluated for Reasoning & Knowledge using benchmarks like MMLU, which involve testing them on various tasks requiring understanding and problem-solving. These tasks cover different categories, such as general knowledge and logic.

The models' performance is measured by how accurately they complete these tasks, with higher scores indicating better reasoning and knowledge abilities. Here are the top AI Models based on Reasoning and Knowledge:

GPT-4o, GPT-4o (Aug 6), Llama 3.1 405B, Claude 3.5 Sonnet: These models are all top performers, each scoring 89% in reasoning and knowledge. They excel at understanding complex information and generating accurate responses across a wide range of topics, making them highly reliable for tasks that require deep knowledge and strong reasoning abilities.

Gemini 1.5 Pro, Llama 3.1 70B: Both models are strong contenders with an 86% score. They handle reasoning and knowledge tasks effectively, making them solid choices, though slightly below the leading models.

Mistral Large 2, Reka Core: Mistral Large 2 scores 84% and Reka Core scores 83%. They perform well in reasoning and knowledge but are a bit less advanced than the top-tier models.

GPT-4o mini: With an 82% score, this model is effective but not as strong as the higher-ranking models. It’s good for many tasks but may struggle with the most challenging ones.

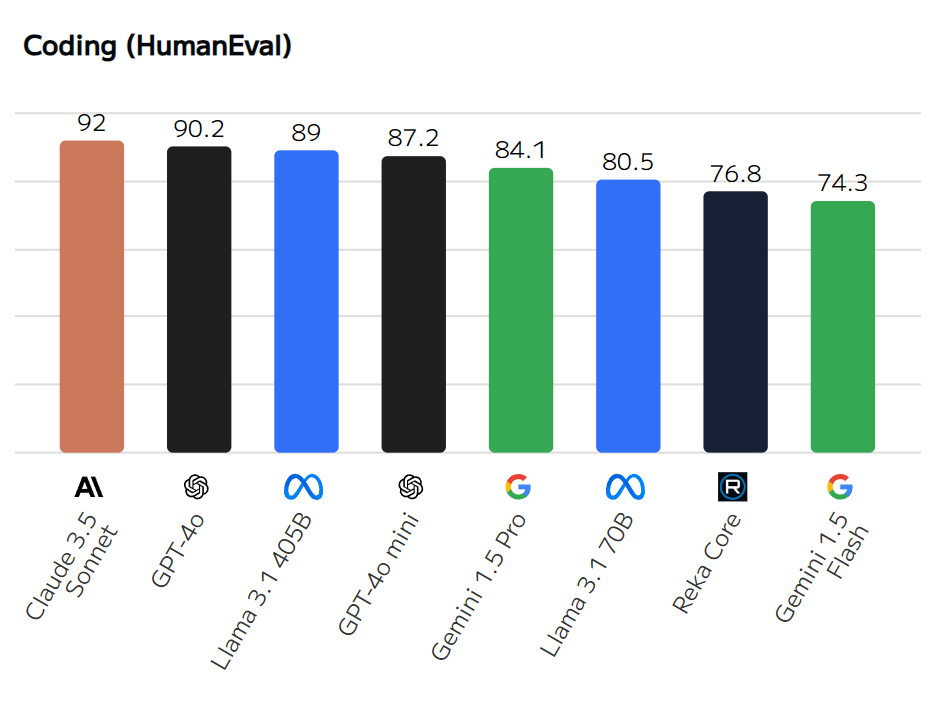

Coding (HumanEval)

Coding (HumanEval) assesses AI models on their ability to write and solve code. Models are tested with various programming tasks and scored based on how accurately they complete them. Higher scores indicate better performance in generating and understanding code. Here are the top AI Models based on Coding performance:

Claude 3.5 Sonnet: Scoring 92, this model leads due to its high proficiency in generating correct and efficient code for complex tasks.

GPT-4o: With a score of 90.2, GPT-4o performs strongly in coding tasks, showing advanced capabilities in understanding and solving a wide range of programming problems.

Llama 3.1 405B: This model scores 89, reflecting its strong coding skills and ability to handle diverse coding challenges effectively.

GPT-4o mini: Scoring 87.2, GPT-4o mini is also highly capable in coding tasks, though slightly less advanced than its larger counterparts.

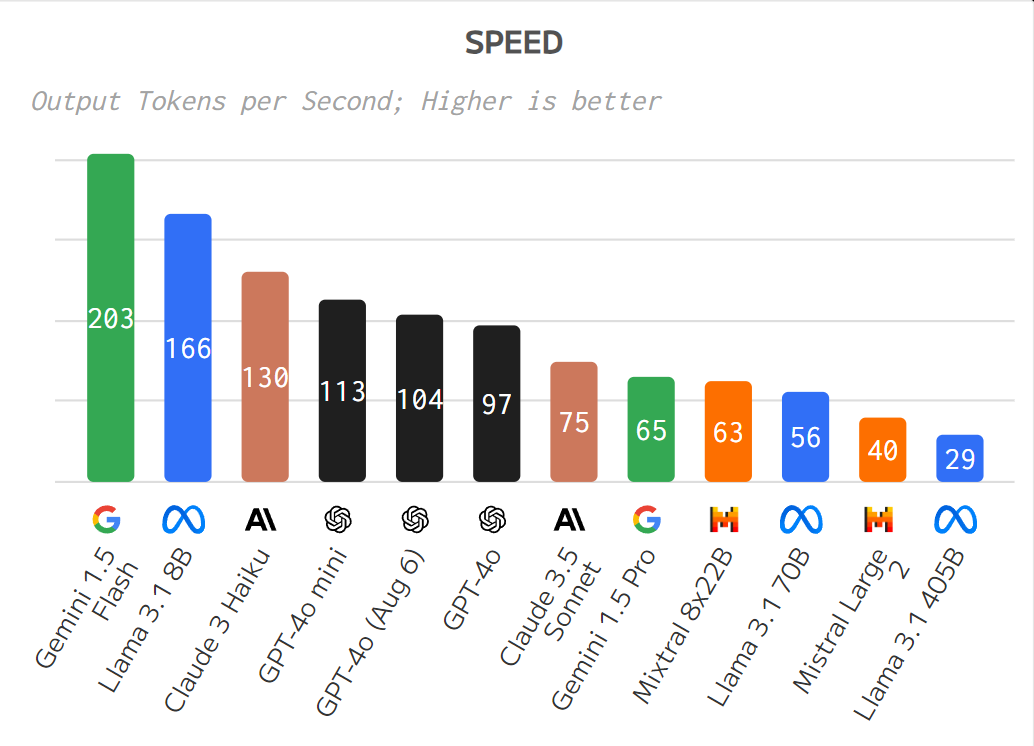

Output Speed

Output speed refers to how quickly a model generates results, typically measured in tokens per second. It indicates the efficiency of the model in processing and delivering responses.

Higher output speed means the model can handle requests and produce results faster, which is crucial for applications requiring real-time or near-real-time responses. Output speed is often balanced against quality, as faster models might trade off some accuracy or detail for speed.

Here are the top models based on output speed:

Gemini 1.5 Flash: With the fastest speed at 171 tokens per second, Gemini 1.5 Flash excels in scenarios where quick responses are critical.

Llama 3.1 8B: Close behind, this model processes 165 tokens per second, making it highly efficient for tasks requiring rapid output.

Claude 3 Haiku & GPT-4o (Aug 6): These models also perform well in terms of speed, though not as fast as Gemini 1.5 Flash. They strike a balance between output speed and quality.
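To get a feel for what these speeds mean in practice, the sketch below estimates how long a response takes to generate at a given output speed. The 171 and 165 tokens-per-second figures come from the list above. The 500-token response length and the function name `generation_seconds` are illustrative assumptions, and the estimate ignores latency before the first token arrives.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Estimate time to generate a response, ignoring time-to-first-token latency."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# Output speeds quoted above (tokens per second).
speeds = {"Gemini 1.5 Flash": 171, "Llama 3.1 8B": 165}

# Hypothetical response length, chosen only for illustration.
response_tokens = 500

for model, tps in speeds.items():
    seconds = generation_seconds(response_tokens, tps)
    print(f"{model}: ~{seconds:.1f} s for {response_tokens} tokens")
```

For a real-time application, add the provider's measured latency to this figure, since users wait for both.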

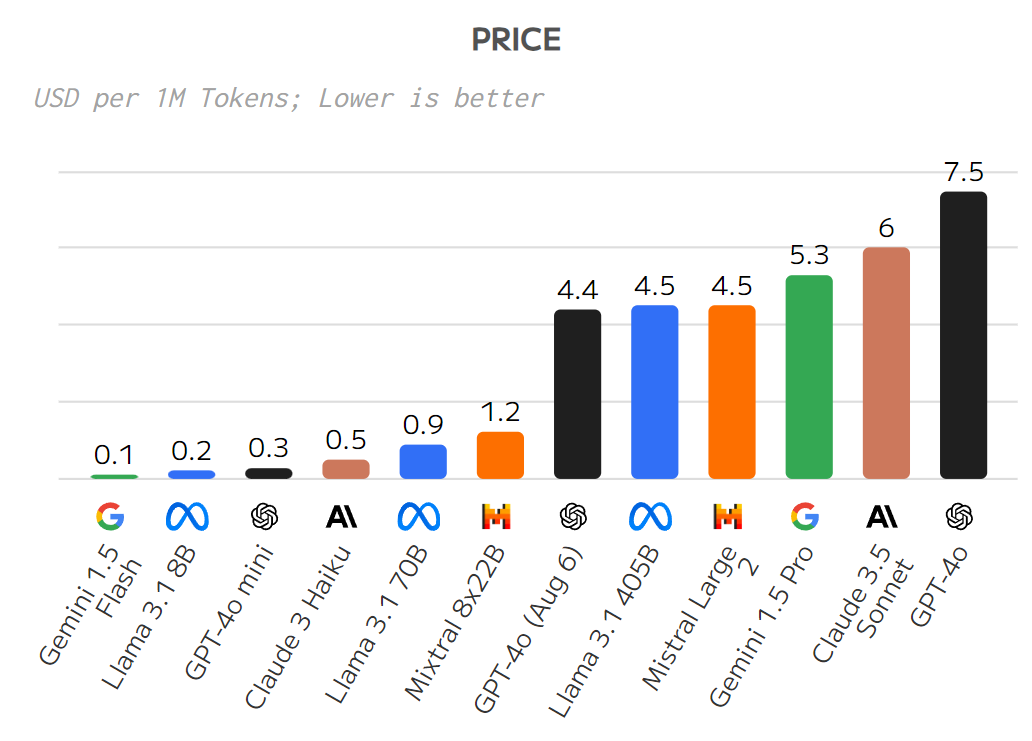

Price

Gemini 1.5 Flash and Llama 3.1 8B: These are the most cost-effective models, with prices starting as low as $0.10 per million tokens.

As models become more advanced or larger in scale (e.g., GPT-4o and its variants), the cost per million tokens generally increases. For example, GPT-4o costs $7.50 per million tokens, making it significantly more expensive than the smaller models.

Smaller or less complex models, such as Llama 3.1 8B, are cheaper than larger and more complex models like Llama 3.1 405B.
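Per-million-token prices are easier to compare once they are turned into a monthly bill. The sketch below does that arithmetic using the two prices quoted above ($0.1 and $7.5 per million tokens). The monthly volume of 50 million tokens and the function name `token_cost_usd` are assumptions made for illustration, and the calculation treats each price as a single blended rate rather than separate input and output rates.

```python
def token_cost_usd(tokens: int, price_per_million_usd: float) -> float:
    """Cost of processing `tokens` tokens at a price quoted per million tokens."""
    return tokens / 1_000_000 * price_per_million_usd


# Prices quoted above (USD per million tokens).
prices = {"Gemini 1.5 Flash": 0.10, "GPT-4o": 7.50}

# Hypothetical monthly volume, chosen only for illustration.
monthly_tokens = 50_000_000

for model, price in prices.items():
    cost = token_cost_usd(monthly_tokens, price)
    print(f"{model}: ${cost:,.2f} per month")
```

At this assumed volume the gap is $5 versus $375 per month, which shows why the price difference matters more as usage grows.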

Price vs. Quality Relationship

There is a general trend that higher quality models are more expensive. However, not all models follow this curve strictly. Some models may offer high quality at a lower price compared to others.

High Quality and High Price: Models like GPT-4o and GPT-4o (Aug 6) fall into this category. They provide high-quality results but come at a higher cost.

High Quality and Low Price: Some models, like Gemini 1.5 Flash and Llama 3.1 8B, offer relatively high quality at a lower price, making them cost-effective options.

Low Quality and Low Price: Models in this range are cheaper but may offer lower performance.

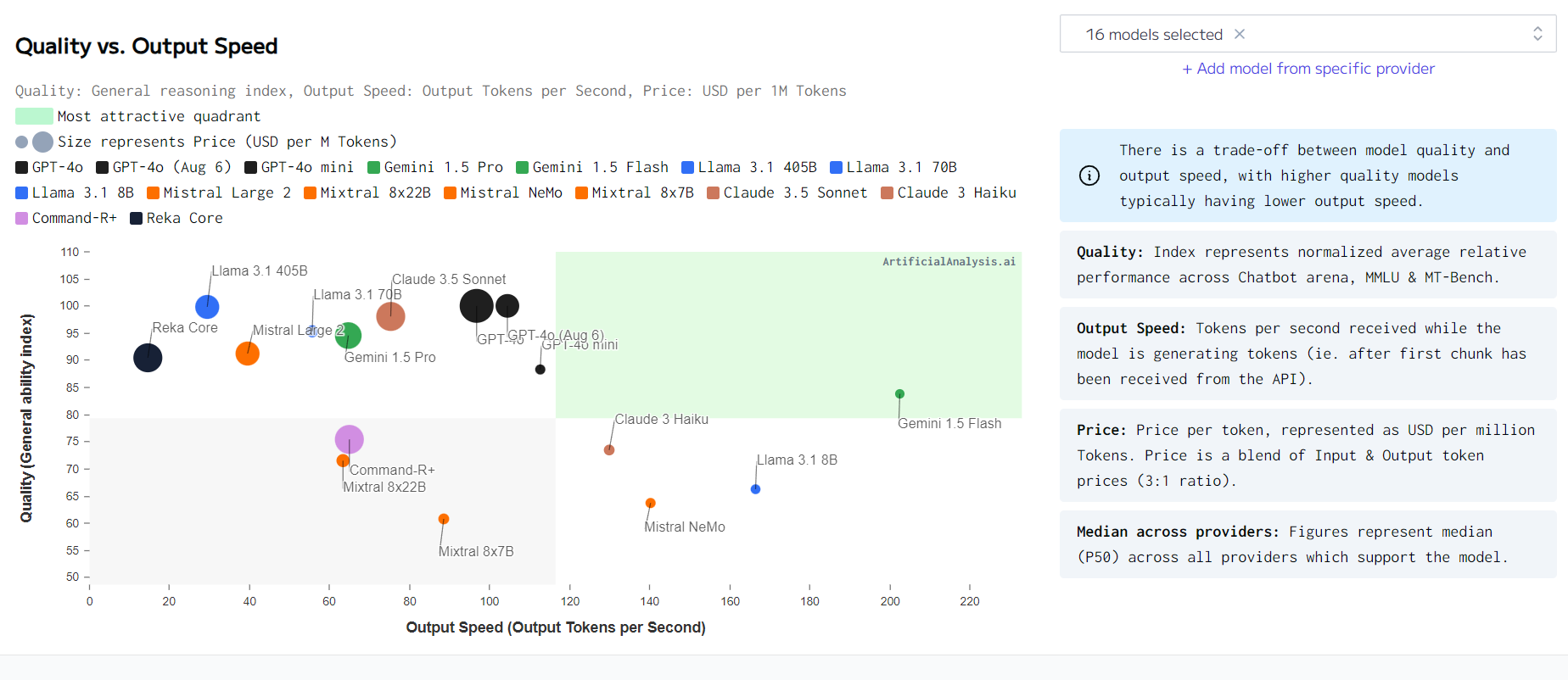

How to choose the right AI Model for your business?

Start by determining what you need from the AI model, like how accurate or fast it should be, and how much you can spend. For instance, if you need a chatbot that works well but you’re on a tight budget, look for models that offer good performance at a lower cost.

Then, compare models at different price points. If you have a small budget, check out lower-cost models to see if they meet your needs. If you have more to spend, you can choose better-performing models. The price vs. quality graph above will help you see which models offer the best balance between the two.

Finally, look for models that give you high quality without a high price. Avoid spending too much on features you don’t need. Also, consider how fast the model works and how much it costs per use. For example, a model with a lower cost per million tokens might be a better deal for you.
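The steps above amount to setting a quality floor and a price ceiling, then picking the cheapest model that clears both. Here is a minimal sketch of that idea. The model names and numbers in `catalogue` are placeholders invented for illustration, not measurements from the charts, and the `Model` class and `shortlist` function are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    quality: float            # benchmark score on a 0-100 scale
    price_per_million: float  # USD per million tokens


def shortlist(models, min_quality, max_price):
    """Keep models that meet the quality floor and price ceiling, cheapest first."""
    fits = [
        m for m in models
        if m.quality >= min_quality and m.price_per_million <= max_price
    ]
    # Sort by price, breaking ties in favor of higher quality.
    return sorted(fits, key=lambda m: (m.price_per_million, -m.quality))


# Placeholder catalogue: names and numbers are invented for illustration.
catalogue = [
    Model("model-a", quality=89, price_per_million=7.50),
    Model("model-b", quality=82, price_per_million=0.30),
    Model("model-c", quality=70, price_per_million=0.10),
]

for m in shortlist(catalogue, min_quality=80, max_price=1.00):
    print(m.name, m.quality, m.price_per_million)
```

Replace the placeholder entries with the scores and prices of the models you are actually considering, and adjust the two thresholds to match your requirements.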

Output Speed vs Price

Models in the high-speed, low-price quadrant of the chart offer a good balance of output speed and cost, making them desirable choices for businesses seeking both efficiency and affordability.

Faster models can come with higher prices, but not always: Gemini 1.5 Flash and Llama 3.1 8B are among both the fastest and the cheapest models listed above. The chart helps visualize how this trade-off plays out across different models.

How to choose the right AI Model for your business?

Start by figuring out your needs for speed and budget. Determine how fast the model needs to be and how much you can afford to spend. For high-speed requirements, choose models that offer fast performance. Make sure the cost per use fits your budget.

Next, find a model that offers a good balance between speed and cost. Look for models that provide high performance without breaking the bank. If speed is essential and you have a bigger budget, go for models with high output speed even if they cost more. For tighter budgets, select models that still provide decent speed at a lower price.

Consider the specific needs of your application. For instance, if you need to handle many requests quickly, prioritize faster models. For less urgent tasks, a slower, more affordable model might be sufficient. Use a chart to compare different models and see which ones offer the best balance of speed and cost.

Finally, choose models that give you good performance without overspending. Aim for a model that provides a reasonable speed at a fair price, and avoid paying extra for features you don’t need.
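One way to apply this advice is to sort candidate models into the four quadrants of a speed-versus-price chart. The sketch below does that with two cutoffs you choose yourself. The `quadrant` function, the cutoff values, and the entries in `models` are all assumptions made for illustration, not figures from the chart.

```python
def quadrant(speed_tps: float, price: float,
             speed_cutoff: float, price_cutoff: float) -> str:
    """Label a model by where it falls on a speed-vs-price chart."""
    fast = speed_tps >= speed_cutoff
    cheap = price <= price_cutoff
    if fast and cheap:
        return "fast and cheap"
    if fast:
        return "fast but expensive"
    if cheap:
        return "slow but cheap"
    return "slow and expensive"


# Placeholder data: (tokens per second, USD per million tokens), invented for illustration.
models = {
    "model-a": (170, 0.10),
    "model-b": (80, 7.50),
    "model-c": (40, 0.20),
}

for name, (speed, price) in models.items():
    label = quadrant(speed, price, speed_cutoff=100, price_cutoff=1.00)
    print(f"{name} -> {label}")
```

Models labeled "fast and cheap" are the natural first candidates. A "slow but cheap" model may still be enough for tasks that are not time-sensitive.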

Conclusion

Choosing the right AI model can be tricky, especially with so many options available. Starting with a popular model might be a good idea, but don't stop there. Explore other models to find the best fit for your needs and budget.

Consider factors like quality, speed, and cost. For example, GPT-4o and Claude 3.5 Sonnet are great for high-quality tasks, while Gemini 1.5 Flash is fast and cost-effective for quick responses.

To choose the best model, first figure out your needs and budget. Then use comparison charts to see which models offer the best balance of performance and cost. Aim for models that provide good value without overspending. This way, you'll find an AI model that meets your needs and fits your budget.

Ready to use AI models without the hassle of managing GPUs? Explore Modelslab's API, designed to help you build next-generation AI products effortlessly.