How to Enhance AI LLM Test Prompts: A Comprehensive Guide

Written on . Posted in AI.

There are many LLM APIs available, but which one performs best, and which one is the right fit for your business? Evaluating these models properly helps you find one that is accurate, reliable, and suited to your needs.

One of the best ways to test them is by using AI LLM test prompts. These prompts help measure how well a model can generate language, solve problems, and provide correct information.

In this article, we'll explain why AI LLM test prompts matter, how to create them, and tips for using them effectively.

What are AI LLM Test Prompts?

AI LLM test prompts are instructions used to evaluate large language models (LLMs). They tell the model what task to perform, like summarizing an article, answering a question, or creating a story. The way these prompts are written affects how well the model responds.

Clear and relevant prompts help the model give better answers. If you’re a developer, tester, or just interested in AI, knowing how to create and use these prompts is important for making better AI systems.

Importance of AI LLM Test Prompts

The growth of large language models (LLMs) like GPT, Gemini, and Llama has introduced powerful tools for tasks ranging from content creation to solving complex problems.

However, it’s essential to regularly assess how well these models perform in different situations. AI LLM test prompts are vital for this evaluation because they:

Evaluate Model Performance: Test prompts help pinpoint where the LLM performs well and where it struggles, such as in understanding, reasoning, and creativity.

Ensure Model Safety: They are used to check for biases or harmful outputs, which is important for maintaining ethical standards in AI systems.

Improve AI Reliability: Regular testing with prompts allows developers to refine the models, making them more dependable and effective over time.

Crafting Effective AI LLM Test Prompts

Effective test prompts are essential for accurately evaluating what an LLM can do. The following examples illustrate various skills that specific prompts can assess:

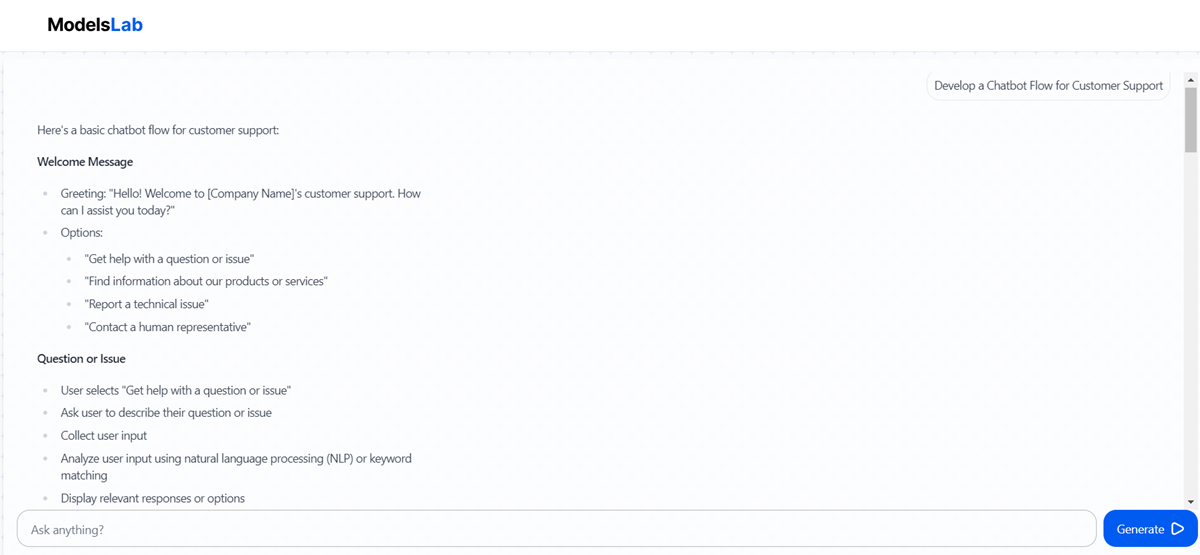

Develop a Chatbot Flow for Customer Support

Test how well an LLM can create structured dialogues by asking it to design a flow for handling common customer support queries. This prompt evaluates the model’s understanding of conversational dynamics and customer needs.
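To grade this prompt automatically, you can ask the model to return its flow in a machine-readable form and then check the structure. The sketch below assumes a hypothetical JSON format that maps each state name to a list of next states, with `start` as the entry point and an empty list marking the end of a conversation. It reports links to undefined states and states a customer can never reach; it does not judge whether the replies themselves are helpful.

```python
import json
from collections import deque


def validate_flow(flow_json: str, start: str = "start") -> list[str]:
    """Return a list of structural problems in a model-generated dialogue flow.

    Assumed (hypothetical) format: {"state": ["next_state", ...], ...};
    a state with an empty list is treated as an end of the conversation.
    """
    try:
        flow = json.loads(flow_json)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(flow, dict):
        return ["flow must be a JSON object"]
    if start not in flow:
        return [f"missing entry state '{start}'"]

    # Transitions that point at states the model never defined.
    problems = [
        f"'{state}' links to undefined state '{nxt}'"
        for state, nexts in flow.items()
        for nxt in nexts
        if nxt not in flow
    ]

    # Breadth-first search from the entry state to find unreachable states.
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in flow[queue.popleft()]:
            if nxt in flow and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    problems += [f"'{state}' is unreachable" for state in flow if state not in seen]
    return problems


good = '{"start": ["order_status", "refund"], "order_status": ["end"], "refund": ["end"], "end": []}'
bad = '{"start": ["billing"], "faq": []}'
print(validate_flow(good))
print(validate_flow(bad))
```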

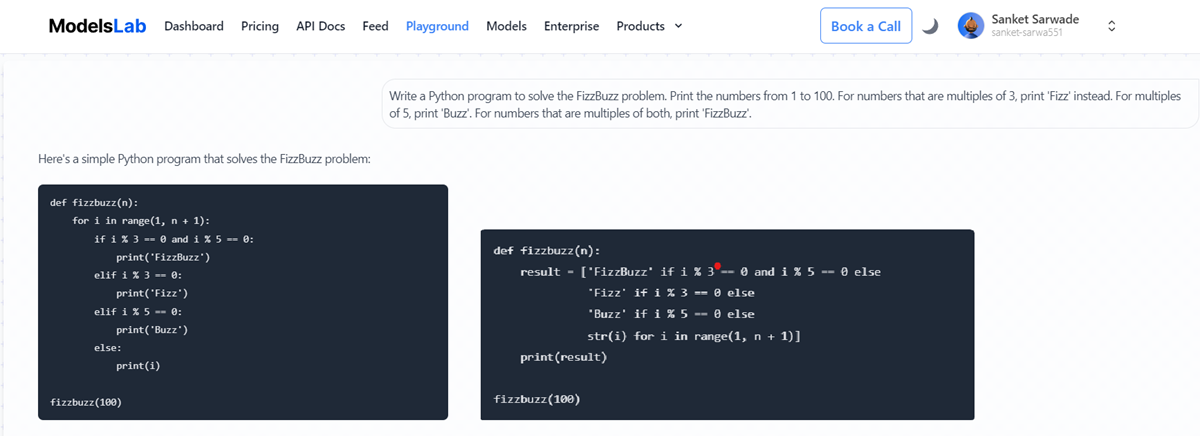

Generate Code to Solve FizzBuzz in Python

Challenge the LLM to create functional code snippets for a specific programming task or algorithm problem. This prompt assesses the model’s coding skills and its ability to understand technical requirements.
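A practical way to score this prompt is to run the generated code and compare its output with a known-correct reference. This minimal sketch assumes the prompt asked for a function named `fizzbuzz(n)` that returns the results for 1 to n as a list of strings; that contract and the sample response are illustrative. Because `exec()` runs arbitrary code, only use this approach in an isolated sandbox.

```python
def reference_fizzbuzz(n: int) -> list[str]:
    """Known-correct FizzBuzz for 1..n, used as the expected answer."""
    out = []
    for i in range(1, n + 1):
        if i % 15 == 0:
            out.append("FizzBuzz")
        elif i % 3 == 0:
            out.append("Fizz")
        elif i % 5 == 0:
            out.append("Buzz")
        else:
            out.append(str(i))
    return out


def check_generated_fizzbuzz(generated_source: str, n: int = 100) -> bool:
    """Run model-generated code and compare fizzbuzz(n) with the reference.

    Assumes the prompt asked for a function named `fizzbuzz(n)` returning a
    list of strings. exec() runs arbitrary code: only do this in a sandbox.
    """
    namespace: dict = {}
    try:
        exec(generated_source, namespace)
        candidate = namespace["fizzbuzz"](n)
    except Exception:
        return False  # code that fails to run or lacks fizzbuzz() fails the test
    return candidate == reference_fizzbuzz(n)


# Illustrative model response (hypothetical).
sample = '''
def fizzbuzz(n):
    return ["FizzBuzz" if i % 15 == 0 else "Fizz" if i % 3 == 0
            else "Buzz" if i % 5 == 0 else str(i) for i in range(1, n + 1)]
'''
print(check_generated_fizzbuzz(sample))  # True
```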

Rewrite this Paragraph in a Professional Tone

Test how well the model can adjust writing tone by converting casual language into formal or business language. This prompt evaluates its understanding of the audience and context.

Answer These Customer FAQs Accurately and Concisely

Check whether the LLM answers frequently asked questions correctly and concisely. This prompt assesses the model’s ability to provide relevant information quickly.
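Accuracy and conciseness can be partly checked in code. In this sketch, each FAQ is paired with phrases a correct answer must contain and a word budget. Keyword matching is only a rough proxy for accuracy, and the return-policy facts and the 50-word limit are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class FaqCase:
    question: str
    required_facts: list[str]  # phrases a correct answer must contain (hypothetical)
    max_words: int = 50        # conciseness budget (an assumed value)


def score_faq_answer(case: FaqCase, answer: str) -> dict:
    """Report missing required facts and whether the answer fits the word budget."""
    lowered = answer.lower()
    missing = [fact for fact in case.required_facts if fact.lower() not in lowered]
    word_count = len(answer.split())
    return {
        "accurate": not missing,
        "missing_facts": missing,
        "concise": word_count <= case.max_words,
        "word_count": word_count,
    }


case = FaqCase(
    question="What is your return window?",
    required_facts=["30 days", "receipt"],
)
print(score_faq_answer(case, "You can return items within 30 days with a receipt."))
```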

Translate this Technical Document into [Local Language]

Evaluate the model’s multilingual capabilities by asking it to translate complex, domain-specific content into another language. This prompt tests its accuracy and understanding of technical terms in different languages.
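For technical translations, one inexpensive check is whether key terms were rendered with the approved equivalents from a glossary. The sketch assumes you maintain such a glossary; the English-to-Spanish entries are illustrative, not an authoritative style guide. It only catches terminology drift, so fluency and meaning still need a human reviewer.

```python
def check_glossary(source: str, translation: str, glossary: dict[str, str]) -> list[str]:
    """List glossary terms that appear in the source but whose approved
    translation is missing from the model's output (case-insensitive)."""
    src = source.lower()
    out = translation.lower()
    return [
        term
        for term, approved in glossary.items()
        if term.lower() in src and approved.lower() not in out
    ]


# Illustrative English -> Spanish glossary (hypothetical, not authoritative).
glossary = {"load balancer": "balanceador de carga", "firewall": "cortafuegos"}
source = "Place the load balancer behind the firewall."
translation = "Coloque el balanceador de carga detrás del firewall."
print(check_glossary(source, translation, glossary))
```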

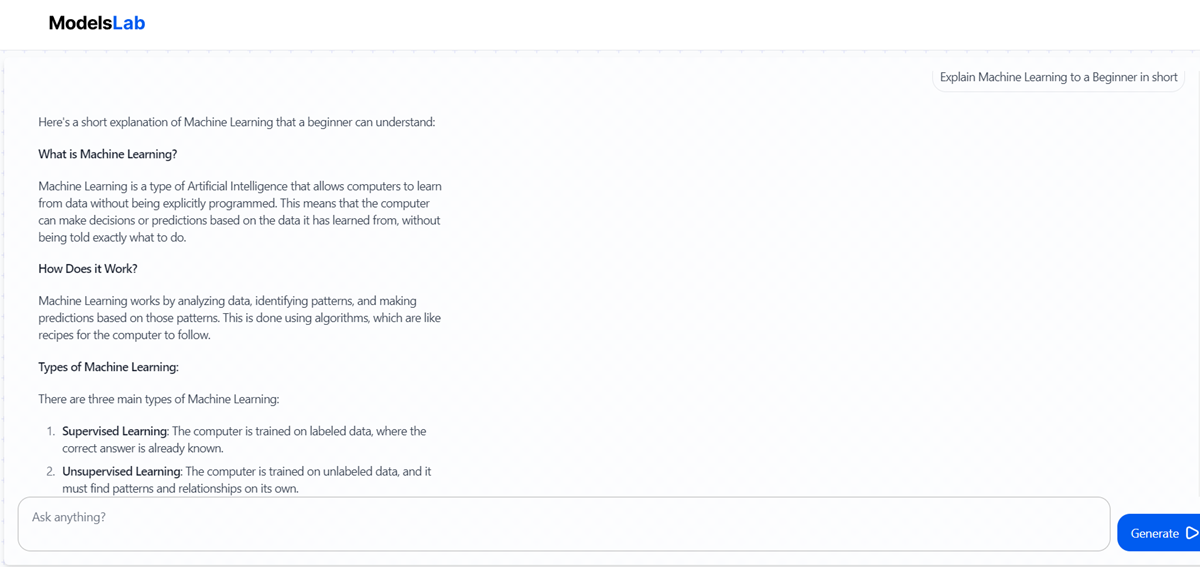

Explain Machine Learning to a Beginner

Assess the LLM’s ability to simplify complex topics by requesting a layman-friendly explanation of a technical subject like machine learning. This prompt evaluates its communication skills and ability to engage a non-technical audience.
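One way to put a number on "beginner-friendly" is a readability score. This sketch computes the Flesch Reading Ease score, 206.835 - 1.015 × (words / sentences) - 84.6 × (syllables / words), where higher means easier to read. The syllable counter is a rough vowel-group heuristic, so treat the scores as approximate and compare answers relative to each other rather than against a strict cutoff.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count groups of consecutive vowels (minimum 1)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_reading_ease(text: str) -> float:
    """206.835 - 1.015 * (words/sentences) - 84.6 * (syllables/words)."""
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / sentences) - 84.6 * (syllables / len(words))


simple = "A computer learns from examples. It finds patterns. Then it makes guesses."
dense = ("Machine learning encompasses statistical methodologies enabling "
         "computational systems to approximate functions from observational data.")
print(round(flesch_reading_ease(simple), 1), round(flesch_reading_ease(dense), 1))
```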

Generate a Creative Blog Post Outline on [Specific Topic]

Challenge the model’s creativity by asking it to create a detailed outline for a blog post with an SEO-friendly structure. This prompt tests its ability to organize ideas effectively and understand content strategies.

Identify and Fix Errors in this Code

Provide buggy code and test if the LLM can spot the issues and correct them effectively. This prompt assesses its problem-solving skills and attention to detail.
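To make this prompt gradable, pair the buggy code with hidden test cases that only a correct fix passes. The `average` function, its bug, and the rule that an empty list returns 0.0 are made up for illustration; in practice you would state the expected behavior in the prompt. As with any generated code, run `exec()` only inside a sandbox.

```python
# Buggy input given to the model (illustrative): it divides by the wrong count,
# so averages are wrong and a one-item list raises ZeroDivisionError.
BUGGY_SOURCE = '''
def average(values):
    return sum(values) / (len(values) - 1)
'''

# Hidden test cases used to grade the model's fix: (input, expected output).
HIDDEN_CASES = [([2, 4, 6], 4.0), ([5], 5.0), ([], 0.0)]


def grade_fix(fixed_source: str) -> float:
    """Return the fraction of hidden cases the model's `average` passes.

    Assumes the prompt specified that an empty list should return 0.0.
    exec() runs arbitrary code: sandbox it in real use.
    """
    namespace: dict = {}
    try:
        exec(fixed_source, namespace)
    except Exception:
        return 0.0
    passed = 0
    for values, expected in HIDDEN_CASES:
        try:
            if namespace["average"](values) == expected:
                passed += 1
        except Exception:
            pass  # a crash or missing function counts as a failed case
    return passed / len(HIDDEN_CASES)


# Illustrative model response (hypothetical).
candidate_fix = '''
def average(values):
    return sum(values) / len(values) if values else 0.0
'''
print(grade_fix(BUGGY_SOURCE), grade_fix(candidate_fix))
```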

Create a Step-by-Step Guide on How to Set Up a Web Server

Evaluate the model’s ability to generate precise and instructional content by prompting it to write a detailed setup guide for a technical process. This prompt tests its instructional clarity and technical understanding.


Best Practices for AI LLM Test Prompts

Here are some best practices to follow:

Test with Different Scenarios

Creating prompts that mimic real-life situations is important for testing how well the model performs. For example, using role-playing prompts allows the model to respond based on specific contexts, which helps evaluate its flexibility. This ensures the model can handle various situations effectively.
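Scenario prompts can be generated systematically by combining roles and situations, so the same task is tested in several contexts. The roles, situations, and template wording below are hypothetical placeholders for your own use cases.

```python
from itertools import product

# Hypothetical roles and situations; replace them with your own use cases.
ROLES = ["a polite customer support agent", "a terse technical support engineer"]
SITUATIONS = [
    "a customer's order arrived damaged",
    "a customer was charged twice",
]
TEMPLATE = "You are {role}. Respond to this situation: {situation}."


def build_scenario_prompts(roles: list[str], situations: list[str]) -> list[str]:
    """Return one prompt for every (role, situation) combination."""
    return [TEMPLATE.format(role=r, situation=s) for r, s in product(roles, situations)]


for prompt in build_scenario_prompts(ROLES, SITUATIONS):
    print(prompt)
```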

Evaluate Performance Across Dimensions

When checking the model’s outputs, it’s essential to look at different aspects of its performance. This includes accuracy (how correct the answers are), relevance (how well the response matches the question), and creativity (how original the response is). By evaluating these areas, you can gain a better understanding of the model's strengths and weaknesses.
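Once each response has been rated on these dimensions, whether by a human reviewer or an automated grader, the ratings can be combined into a single score while the per-dimension values stay available for analysis. The 1-to-5 scale and the weights here are assumptions; choose weights that reflect your own objectives.

```python
# Assumed 1-to-5 rating scale and illustrative weights; tune them to your goals.
WEIGHTS = {"accuracy": 0.5, "relevance": 0.3, "creativity": 0.2}


def weighted_score(ratings: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-dimension ratings into a weighted average on the same scale."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(ratings[d] * w for d, w in weights.items()) / total_weight


ratings = {"accuracy": 5, "relevance": 4, "creativity": 2}
print(round(weighted_score(ratings), 2))  # 4.1
```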

Define Clear Objectives

Setting clear goals is crucial when creating test prompts for LLMs. Decide what you want to assess, such as language understanding or creativity. Clearly defining what a successful response looks like will help ensure that your prompts effectively measure what you want to know.
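Writing the objective and success criterion next to each prompt makes "success" explicit and checkable. This sketch stores each test case as a record holding the skill being assessed, the prompt, and a pass/fail function; the field names and the three-sentence summary rule are illustrative.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PromptTestCase:
    skill: str                     # what the prompt is meant to assess
    prompt: str                    # the text sent to the model
    passes: Callable[[str], bool]  # success criterion applied to the response


def run_case(case: PromptTestCase, response: str) -> dict:
    """Apply the case's success criterion to one model response."""
    return {"skill": case.skill, "prompt": case.prompt, "passed": case.passes(response)}


summary_case = PromptTestCase(
    skill="summarization",
    prompt="Summarize the following article in at most three sentences: ...",
    # Illustrative criterion: between one and three sentence-ending periods.
    passes=lambda text: 0 < text.count(".") <= 3,
)
print(run_case(summary_case, "LLMs need testing. Prompts help."))
```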

Use Diverse Prompt Types

Using a mix of different types of prompts can improve how you assess the model. Open-ended questions can encourage detailed answers, while multiple-choice questions test specific knowledge. Fill-in-the-blank prompts check how well the model understands context. This variety provides a fuller picture of the model’s abilities.
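Closed formats such as multiple-choice and fill-in-the-blank can be graded automatically, while open-ended answers usually need a rubric or a human reviewer. The graders below are minimal sketches: the multiple-choice grader assumes the prompt asked the model to reply with a single option letter, and the fill-in-the-blank grader accepts any of several listed answers after normalizing case, spacing, and trailing periods.

```python
import re


def grade_multiple_choice(response: str, correct: str) -> bool:
    """Take the first standalone capital letter A-D as the chosen option.

    Assumes the prompt asked for a single letter; a sentence starting with
    the article "A" would be misread, so keep the instruction strict.
    """
    match = re.search(r"\b([A-D])\b", response)
    return match is not None and match.group(1) == correct.upper()


def grade_fill_in_blank(response: str, accepted: list[str]) -> bool:
    """Case- and whitespace-insensitive match against any accepted answer."""
    def normalize(s: str) -> str:
        return " ".join(s.lower().strip(" .").split())
    return normalize(response) in {normalize(a) for a in accepted}


print(grade_multiple_choice("The answer is (B) Paris.", "B"))
print(grade_fill_in_blank("  Tokenization. ", ["tokenization", "tokenisation"]))
```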

Ensure Clarity and Precision

Use clear, simple language in your prompts to avoid ambiguity. Plain wording, free of unnecessary jargon, makes it obvious what you are asking for and makes prompts easier to read and respond to. This clarity leads to more accurate and relevant answers, and it makes results easier to interpret: if a prompt is ambiguous, a weak answer may reflect the prompt rather than the model.

Document Findings

Keep a record of what you find during testing. Write down each prompt, the response it generated, and whether it met your success criteria. This documentation helps you analyze results and compare models or versions over time. By updating your testing methods based on these findings, you can continually improve how you evaluate the LLM.
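A simple way to keep these records is to append each result to a JSON Lines file, one JSON object per line, which is easy to diff, filter, and load later. The field names, file path, and model names in this sketch are hypothetical.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def log_result(path: Path, model: str, prompt: str, response: str, passed: bool,
               notes: str = "") -> None:
    """Append one test result as a JSON line so runs can be compared later."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "prompt": prompt,
        "response": response,
        "passed": passed,
        "notes": notes,
    }
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def pass_rate(path: Path, model: str) -> float:
    """Fraction of logged results for `model` that passed (0.0 if none)."""
    with path.open(encoding="utf-8") as f:
        rows = [json.loads(line) for line in f if line.strip()]
    rows = [r for r in rows if r["model"] == model]
    return sum(r["passed"] for r in rows) / len(rows) if rows else 0.0
```

For example, call `log_result(Path("prompt_results.jsonl"), "model-a", prompt, response, passed)` after each test, then use `pass_rate` to compare models or prompt versions across runs.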

Conclusion

AI LLM test prompts are vital for assessing the performance, safety, and reliability of large language models. By creating clear and structured prompts, developers can pinpoint a model’s weaknesses and use those findings to improve how it performs.

Using LLM APIs makes testing more efficient by streamlining prompt management and evaluation. With ongoing testing and refinement, AI systems can become stronger and more trustworthy. By following these best practices, you can develop more effective AI LLM test prompts, resulting in better performance and more reliable outcomes.

Try Modelslab's LLM API today to improve your AI development!